Mastering Generative AI: From LLMs to Prompt Engineering Fundamentals

Imagine unlocking a tool that crafts new text, images, or even music from simple instructions. That’s the power of generative AI. In this post, we’ll build on AI basics to explore how large language models work and why prompt engineering changes everything for you.

Introduction: The Second Week of AI Understanding

Recap of Foundational AI Concepts

AI means building systems that imitate aspects of human intelligence. Right now, all AI we use falls under artificial narrow intelligence, or ANI. It handles specific tasks but can’t reason across domains the way a person can. That broader ability would be artificial general intelligence, or AGI, and it doesn’t exist yet.

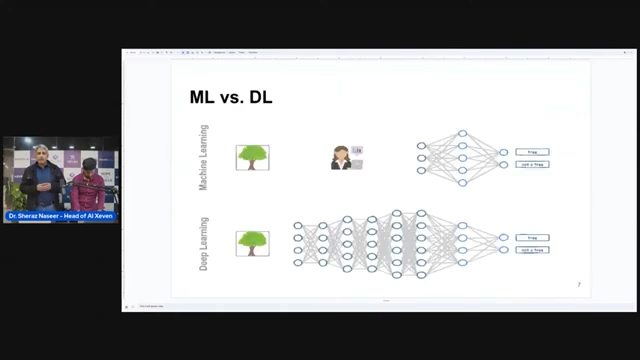

We looked at the main branches: machine learning and deep learning. Machine learning lets AI learn rules from past data without you spelling them out. Deep learning takes this further with layered networks loosely inspired by how our brains process information.

Data comes in two types: structured and unstructured. Structured data fits in tables with rows and columns, like spreadsheets. Unstructured data includes text, images, or speech that doesn’t fit neat boxes.

Labeled data is key for supervised learning. You add tags to data, like marking an image as “cat” or a sentence as “positive.” This helps the model learn patterns. Without labels, you shift to unsupervised or reinforcement learning.

Introducing the Three Pillars of Machine Learning

Supervised learning uses labeled data to train models. It predicts outcomes, like spotting spam emails. You feed it examples with answers, and it gets better over time.
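Here’s a minimal sketch of that idea, not a production spam filter. The four training messages, the `train` and `predict` helpers, and the word-overlap scoring are all assumptions made up for illustration.

```python
from collections import Counter

# Hypothetical labeled examples: (text, label). Invented for illustration.
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team on monday", "ham"),
]

def train(examples):
    """Count how often each word appears under each label."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def predict(counts, text):
    """Pick the label whose training words overlap most with the text."""
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in counts.items()}
    return max(scores, key=scores.get)

model = train(TRAINING_DATA)
print(predict(model, "claim your free prize"))   # spam
print(predict(model, "team meeting on monday"))  # ham
```

The labels do the teaching here: the model only knows “free” hints at spam because the examples said so.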

Unsupervised learning works without labels. It finds hidden groups in data, like sorting potatoes by size in a basket. You spot clusters based on similarities, even if you don’t name them.
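To make the potato example concrete, here’s a rough sketch that groups weights with a tiny one-dimensional k-means. K-means is swapped in as one common clustering method; the weights, the starting centers, and the `kmeans_1d` helper are assumptions for illustration.

```python
def kmeans_1d(values, centers, iterations=10):
    """Assign each value to its nearest center, then move each center to its group's mean."""
    centers = list(centers)
    groups = []
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Keep the old center if a group ends up empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

# Hypothetical potato weights in grams. No labels are provided.
weights = [90, 95, 110, 240, 260, 255]
centers, groups = kmeans_1d(weights, centers=[100, 200])
print(groups)  # [[90, 95, 110], [240, 260, 255]]
```

Nobody told the code which potatoes are “small” or “large”. It found the two groups from the numbers alone.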

Reinforcement learning mimics trial and error. An agent interacts with its world, chasing rewards. Think of a game where the goal is high scores without losing lives. It tries actions, learns from wins, and refines moves to max out points.
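A small sketch of trial and error, assuming a made-up game with three actions that each pay a fixed score. The epsilon-greedy strategy used here is one simple, common approach, and `REWARDS` and `run_agent` are hypothetical names.

```python
import random

# Hypothetical game: each action always pays a fixed score.
# The agent does not know these values in advance.
REWARDS = [1.0, 5.0, 2.0]

def run_agent(steps=500, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    estimates = [0.0] * len(REWARDS)  # the agent's current guess of each action's value
    counts = [0] * len(REWARDS)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(len(REWARDS))
        else:
            # Exploit: use the action that looks best so far.
            action = max(range(len(REWARDS)), key=lambda a: estimates[a])
        reward = REWARDS[action]
        counts[action] += 1
        # Running average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates

estimates = run_agent()
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_action, estimates)
```

The agent starts out knowing nothing, stumbles onto the high-paying action while exploring, and then keeps choosing it.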

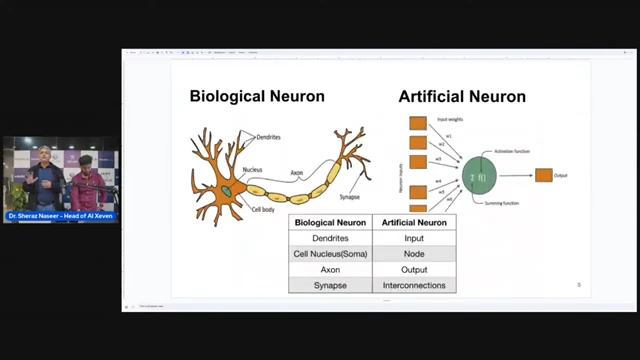

These pillars form machine learning’s base. Deep learning builds on them by using neural networks. These networks draw from biology, where billions of neurons fire to make decisions. In AI, we build artificial versions to handle tough tasks.

Section 1: Deep Learning and the Rise of Complex Models

Neural Networks: Structure and Function

Neural networks start with an input layer that takes your data, like an image or text. Hidden layers in the middle crunch numbers to spot patterns. The output layer delivers results, such as “this is a dog.”
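The three layers can be sketched in a few lines. This is an illustrative forward pass only: the weights are hand-picked placeholders (a trained network would learn them from data), and `dense` and `forward` are hypothetical helper names.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the inputs, adds a bias, applies sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical, hand-picked weights for illustration.
HIDDEN_W = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # 3 hidden neurons, 2 inputs each
HIDDEN_B = [0.0, 0.1, -0.1]
OUTPUT_W = [[1.0, -1.0, 0.5]]                       # 1 output neuron, 3 hidden inputs
OUTPUT_B = [0.0]

def forward(inputs):
    hidden = dense(inputs, HIDDEN_W, HIDDEN_B)  # hidden layer spots patterns
    return dense(hidden, OUTPUT_W, OUTPUT_B)    # output layer delivers the result

output = forward([1.0, 2.0])  # input layer: two numbers
print(output)
```

You could read the single output as a score between 0 and 1, such as how confident the network is that the input is a dog.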

Picture a jumbled page you slowly unfold. Each hidden layer straightens out the mess step by step. This turns complex inputs into clear outputs the model can use.

Why call it deep learning? “Deep” refers to the number of layers: the more hidden layers between input and output, the deeper the processing, like digging into details. The catchy name arguably helped the field sound advanced and attract funding, too. It stuck because these models solve hard problems that shallower approaches can’t.

The Necessity of Data and Computational Power

Good data drives everything. Build the best model, but feed it junk, and you get junk results. That’s the garbage in, garbage out rule. Data quality puts a ceiling on the model’s accuracy.

Deep models need huge computing power. More layers and neurons mean more math to run. You also require tons of data to train them right.
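To see how fast the math grows, you can count the weights and biases in a fully connected network. A quick sketch, with layer sizes chosen only as examples and `count_parameters` as a hypothetical helper:

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network with the given layer sizes."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # one weight per connection, one bias per neuron
    return total

print(count_parameters([784, 128, 10]))       # 101770
print(count_parameters([784, 512, 512, 10]))  # 669706
```

Adding one hidden layer and widening the network pushed the count from about 100 thousand to about 670 thousand values, each of which has to be stored and updated during training.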

Without strong hardware, training stalls. This push for power shapes how we build and use these tools today.

Discriminative vs. Generative AI

Discriminative AI sorts things. It checks if an image shows a cat or not. Or it labels a sentence as positive or negative. Speech? It tells male from female voices or Urdu from French.

Generative AI creates fresh stuff. It makes new images or text that didn’t exist before. To generate, it first discriminates—like a kid drawing an apple and bananas. The child knows the difference, then draws them.

This creation relies on understanding basics first. Generative models blend discrimination with invention to produce novel outputs.

Section 2: Generative AI Domains and Core Models

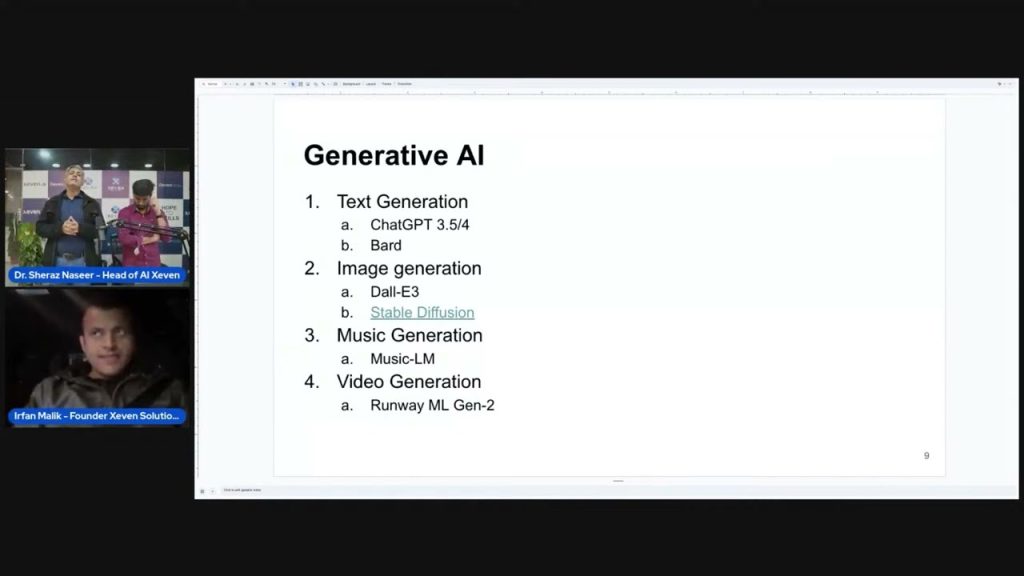

Key AI Sub-Domains Addressed by Generative AI

Generative AI shines in natural language processing, or NLP. It handles text, powering tools like chatbots.

Computer vision covers images and videos. Videos are just moving pictures, so models generate or edit them.

Speech and audio processing deals with sound waves. Here, generative models create new audio, such as synthetic speech or music composed from learned patterns.

These areas link back to unstructured data. Generative tools adapt to each, making your work easier. For earning potential, mastering them opens doors.

The Cornerstones of Modern Generative AI

Two big model families lead the way: large language models and diffusion models. Diffusion models, often latent ones, generate images by starting from random noise and removing it step by step.

You can grab free versions on Hugging Face. Download, run, and test them yourself.

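Large language models focus on text under NLP. Most are built on the transformer architecture. GPT, short for generative pre-trained transformer, is the best-known family.

Examples include GPT-3 with 175 billion parameters. OpenAI hasn’t published a parameter count for GPT-4, and the openly available Llama 2 comes in sizes from 7 to 70 billion. Parameters are the learned weights on the connections between neurons, and more of them give a model more capacity to capture patterns.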

Understanding Large Language Models (LLMs)

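LLMs process vast text data. Their transformer architecture lets them predict the next piece of text and, by repeating that, create new text.

Billions of parameters let a model capture subtle patterns in language. IQ is a test designed for people, and there’s no standard IQ score for GPT-3 or GPT-4, so treat any such number as a loose analogy. What is documented is that GPT-4 outperforms its predecessors on a wide range of exams and benchmarks.

This growth ties to compute power. Larger models trained on more data tend to perform better, though size alone doesn’t guarantee it. In time, you’ll plug LLMs into robots or smart homes to build products that earn real money.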

Section 3: The Mechanics of LLM Text Generation

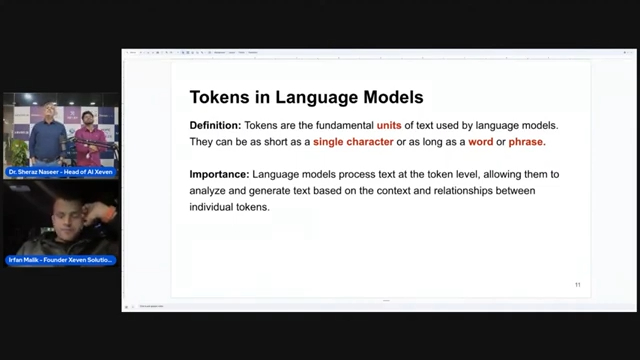

Tokenization: The LLM’s Language

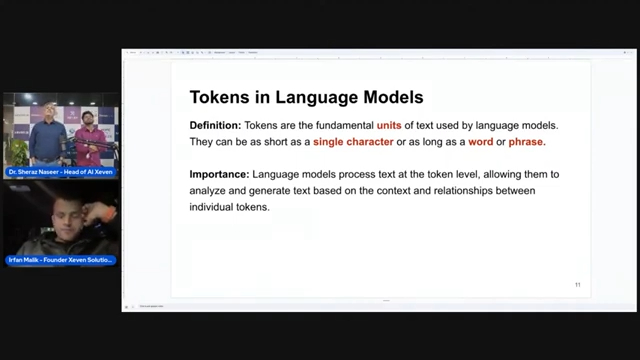

Tokenization splits text into tokens, the model’s basic units. As a rough rule for English, one token is about four characters, or three quarters of a word, so a ten-word sentence comes to roughly 13 tokens.

Tokens can be words, parts of words, or even characters. The model’s vocabulary is its token list—everything it knows.
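Here’s a toy sketch of splitting text against a fixed vocabulary. Real tokenizers learn vocabularies of tens of thousands of entries from data and use more refined algorithms; the seven-entry `VOCAB` and the greedy longest-match rule below are assumptions for illustration.

```python
# Hypothetical toy vocabulary, invented for this example.
VOCAB = ["un", "break", "able", "token", "s", " ", "the"]

def tokenize(text, vocab=VOCAB):
    """Greedy longest-match: at each position, take the longest vocabulary entry that fits."""
    tokens = []
    i = 0
    while i < len(text):
        match = max((v for v in vocab if text.startswith(v, i)), key=len, default=None)
        if match is None:
            match = text[i]  # unknown text falls back to a single character
        tokens.append(match)
        i += len(match)
    return tokens

print(tokenize("the unbreakable tokens"))
# ['the', ' ', 'un', 'break', 'able', ' ', 'token', 's']
```

Notice that “unbreakable” isn’t in the vocabulary, yet the model can still represent it by combining three smaller tokens.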

Give a prompt as seed input. The LLM processes tokens to generate output. Limited tokens mean careful word choice.

LLM Inference: Predictive Probability

LLMs predict the next token based on what came before. “The sky is” might lead to “blue” with high odds.

Context matters. “The orange is a tasty” points to “fruit.” But “The orange is a bright” leans toward “color.”

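The model scores every token in its vocabulary, picks a likely one (the single most probable token in the simplest case, or a sample from the top candidates), and appends it. It repeats, building sentences token by token.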
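The loop can be sketched with a lookup table standing in for the model. The probabilities below are invented, and keying them only on the previous token is a simplifying assumption: a real LLM computes them from the whole context with a neural network.

```python
# Hypothetical next-token probabilities, keyed by the previous token.
NEXT_TOKEN_PROBS = {
    "the": {"sky": 0.6, "orange": 0.4},
    "sky": {"is": 0.9, "was": 0.1},
    "is": {"blue": 0.7, "clear": 0.2, "falling": 0.1},
    "blue": {"<end>": 1.0},
}

def generate(prompt_tokens, max_new_tokens=5):
    """Repeatedly append the most likely next token until the table says stop."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = NEXT_TOKEN_PROBS.get(tokens[-1])
        if probs is None:
            break  # nothing known about this token
        next_token = max(probs, key=probs.get)  # greedy: take the top probability
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens

print(generate(["the"]))               # ['the', 'sky', 'is', 'blue']
print(generate(["the", "sky", "is"]))  # ['the', 'sky', 'is', 'blue']
```

Always taking the top token is called greedy decoding. Many chat tools sample among the likely candidates instead, which is why the same prompt can give different answers.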

This chain creates full responses. Like reading left to right, order shapes meaning. Wrong sequence? The output flops.

Context Window: The Model’s Attention Span

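Every LLM has a context window: a limit on how many tokens it can handle at once, counting both your prompt and its reply. The original GPT-3.5 handled about 4,000 tokens; GPT-4 launched with 8,000 and 32,000 token versions.

Exceed it, and the request gets rejected or the oldest text gets cut off, so the model loses track of it. It’s like overwhelming a person with too much at once.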
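One common way to stay inside the limit is to keep only the most recent tokens and leave room for the reply. A minimal sketch, assuming tokens are already in a list; `fit_to_context` and its parameter names are hypothetical.

```python
def fit_to_context(tokens, context_window, reserved_for_output):
    """Keep only the most recent tokens that fit once room for the reply is set aside."""
    budget = context_window - reserved_for_output
    if budget <= 0:
        raise ValueError("reserved_for_output must be smaller than context_window")
    return tokens[-budget:]

history = list(range(10))              # stand-in for 10 tokens of conversation
print(fit_to_context(history, 8, 3))   # [5, 6, 7, 8, 9]
```

The first five tokens are dropped, which is exactly the “forgetting” you see in long chats.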

In business, track token use for costs. Input plus output tokens add up. Estimate for clients, but add disclaimers—bills can double. This saves money and builds trust.
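A back-of-the-envelope estimate is simple arithmetic. The prices below are placeholders, not any provider’s real rates, and `estimate_cost` is a hypothetical helper; check the current price list before quoting a client.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Cost of one request when input and output tokens are billed per 1,000 tokens."""
    return ((input_tokens / 1000) * input_price_per_1k
            + (output_tokens / 1000) * output_price_per_1k)

# Placeholder prices in dollars per 1,000 tokens, invented for this example.
cost = estimate_cost(1500, 500, input_price_per_1k=0.01, output_price_per_1k=0.03)
print(f"${cost:.4f} per request")
print(f"${cost * 10_000:.2f} for 10,000 requests")
```

Output tokens are often priced higher than input tokens, so a chatty model can cost more than a long prompt.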

Section 4: Prompt Engineering: Directing the AI Powerhouse

The Shift from Answering to Questioning

Prompt engineering crafts inputs to guide LLMs. With token limits, smart prompts get the best results.

Asking the right question beats knowing the answer. An LLM’s capabilities only show when your question is clear. Master this, and you program it with words.

It’s a new skill in AI. Practice turns vague ideas into sharp outputs fast.

The Anatomy of a High-Quality Prompt

Think of prompting like talking to a wise elder. Give background, speak clearly, and stay on point.

Start with persona: “Act as a lawyer.” This tunes the model to that role.

Define the task next. Explain your goal, like building a database schema.

Break it into steps: “First, review data. Then, suggest tables.” Add limits, like “Use only SQL basics.”

Specify output: “List in bullets.” This shapes the response perfectly.
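The pieces above (persona, task, steps, limits, output format) can be assembled with a small template. This is one possible layout, not a required format, and `build_prompt` is a hypothetical helper.

```python
def build_prompt(persona, task, steps, constraints, output_format):
    """Assemble a prompt from persona, task, steps, constraints, and output format."""
    lines = [f"Act as {persona}.", f"Task: {task}", "Steps:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    lines.append("Constraints:")
    lines += [f"- {c}" for c in constraints]
    lines.append(f"Output format: {output_format}")
    return "\n".join(lines)

prompt = build_prompt(
    persona="a database designer",
    task="Design a database schema for a small online store.",
    steps=["Review the data.", "Suggest tables."],
    constraints=["Use only SQL basics."],
    output_format="List in bullets.",
)
print(prompt)
```

Keeping the parts separate makes it easy to swap the persona or tighten a limit without rewriting the whole prompt.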

Realizing the Business Value of Prompt Engineering

Good prompts unlock productivity now. There’s no waiting for years: you generate ideas and products quickly.

Use them for freelancing or apps. In meetings, know token costs to win deals.

Next, we’ll practice prompts hands-on. This skill pays off in text and beyond.

Conclusion: Next Steps in the AI Journey

We’ve covered AI foundations, from data types to machine learning pillars. Deep learning’s neural networks power complex tasks, but data and compute are key.

AI models split into discriminative ones that sort and generative ones that create. LLMs, built on the transformer architecture behind GPT, use tokens and context windows to generate text by predicting one token at a time.

Prompt engineering directs this power. Assign roles, detail tasks, set limits, and format outputs for top results.

In the next session, we’ll dive into real prompts for LLMs. We’ll also touch on diffusion models for images, like Stable Diffusion. Start practicing today, and your AI skills will grow fast. Keep learning, and good luck!