Harnessing Generative AI: A Deep Dive into DALL-E 2, DALL-E 3, and Hugging Face Models

Imagine typing a simple sentence like “a fluffy cat in sunny grass” and watching a vivid image appear on your screen. That’s the magic of text-to-image AI models. These tools have changed how we create visuals, turning words into pictures that feel real. DALL-E 2 and DALL-E 3 are among the best known, built on diffusion models. They take your prompt and turn noise into art. We’ll explore their inner workings, hands-on use, and how open-source options like Hugging Face fit in. This guide helps you grasp the basics and start building your own projects.

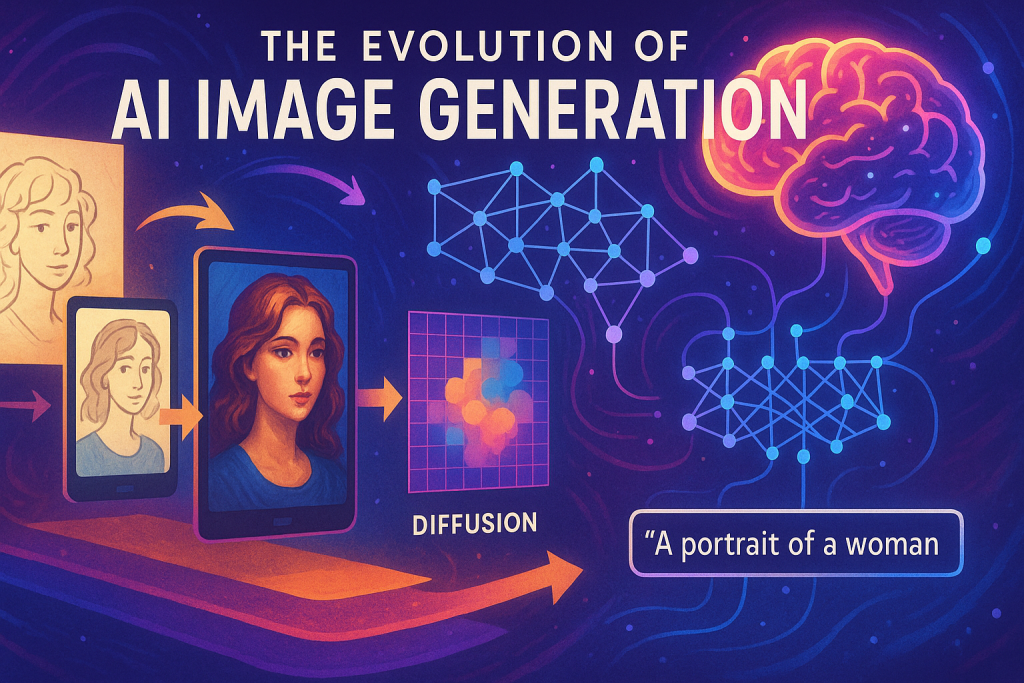

Introduction: The Evolution of AI Image Generation

The Rise of Text-to-Image Models

Text-to-image models mark a big step in AI art. Early versions needed lots of setup, but now tools like DALL-E make it easy. You describe a scene, and the AI generates it fast. Diffusion models power most of these, starting with random dots and refining them step by step into clear images. Think of it like sculpting fog into a statue. This tech powers DALL-E 2 and 3, letting anyone create pro-level visuals without drawing skills.
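To make the “noise in, image out” idea concrete, here is a toy sketch in Python with NumPy. It is not a real diffusion model: a real model learns from training data to predict the correction at each step, while this loop cheats by peeking at a known target. The function name toy_denoise and the tiny 4×4 “image” are made up for illustration.

```python
import numpy as np

def toy_denoise(target, steps=50, seed=0):
    """Start from random noise and nudge it toward `target` step by step."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)  # pure noise, same shape as the target
    errors = []
    for t in range(steps):
        remaining = steps - t
        # Close a fraction of the remaining gap; the last step closes all of it.
        x = x + (target - x) / remaining
        errors.append(float(np.abs(target - x).mean()))
    return x, errors

# A made-up 4x4 "image" with values between 0 and 1.
target = np.linspace(0.0, 1.0, 16).reshape(4, 4)
image, errors = toy_denoise(target)
print(f"error after step 1: {errors[0]:.3f}, after final step: {errors[-1]:.3f}")
```

The error shrinks with every pass, which is the intuition behind “sculpting fog”: no single step creates the picture, but many small corrections do.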

Understanding the Core Architecture: CLIP and Diffusion

DALL-E relies on CLIP for linking words to pictures. CLIP was trained on hundreds of millions of image-text pairs from the web. It learns to match descriptions to visuals by producing vectors that sit close together when the text and image agree. Diffusion handles the image building, guided by those vectors. Together, they form a pipeline: text in, image out. This setup is what lets the model handle complex ideas, like emotions or busy scenes.

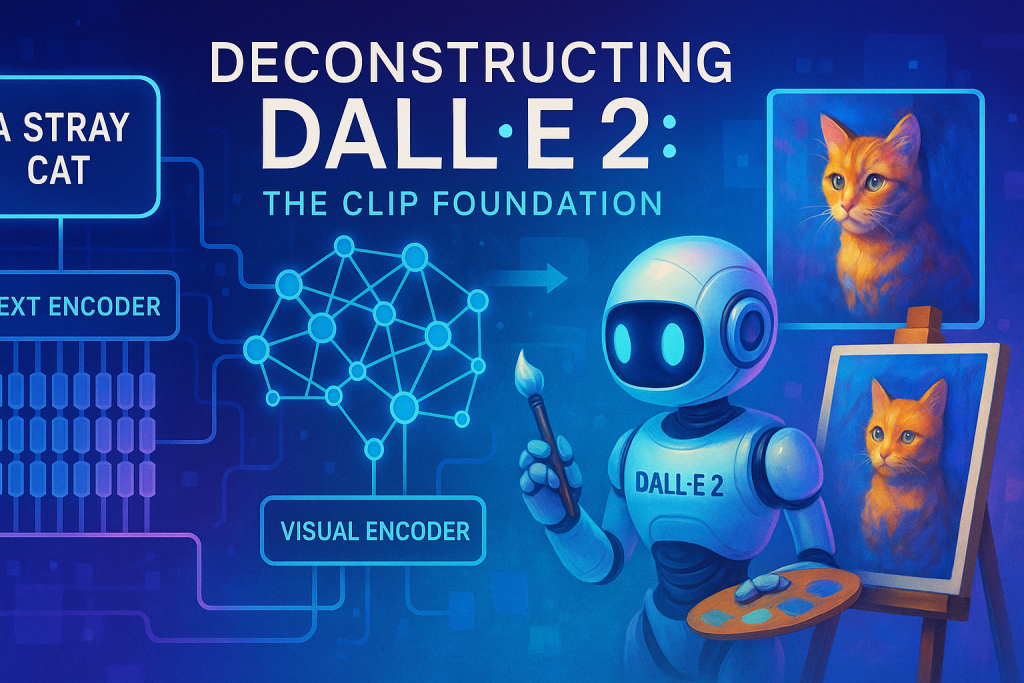

Section 1: Deconstructing DALL-E 2: The CLIP Foundation

How CLIP Enables Text Understanding

CLIP stands for Contrastive Language-Image Pre-training. It takes an image and its caption, then turns both into vectors. These vectors end up very similar if the text matches the picture. For example, feed it a photo of a running cat and the phrase “cat running.” The outputs cluster close in vector space. This bridge lets DALL-E grasp your prompt’s meaning. Without CLIP, text would just be words, not guides for creation.
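“Close in vector space” is usually measured with cosine similarity. The sketch below uses tiny made-up vectors rather than real CLIP output (real embeddings have hundreds of dimensions), so treat the numbers as an illustration of the idea only.

```python
import numpy as np

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical 4-dimensional embeddings, invented for this example.
image_running_cat = [0.9, 0.1, 0.8, 0.0]
text_cat_running = [0.8, 0.2, 0.9, 0.1]
text_bowl_of_soup = [0.0, 0.9, 0.1, 0.8]

match = cosine_similarity(image_running_cat, text_cat_running)
mismatch = cosine_similarity(image_running_cat, text_bowl_of_soup)
print(f"matching caption: {match:.2f}, unrelated caption: {mismatch:.2f}")
```

The matching caption scores far higher than the unrelated one. Contrastive training pushes real embeddings toward exactly that pattern.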

DALL-E uses CLIP’s text encoder right at the start. You type your idea, and it converts to a vector. That vector steers the whole process. CLIP’s training on vast data helps it handle diverse subjects and styles, though it works best with English prompts. You get usable results, even for tricky descriptions.

DALL-E 2’s Encoder-Decoder Framework

DALL-E 2 has three main parts. The first, the CLIP text encoder, turns your prompt into a text vector. The second, called the prior, maps that text vector to a matching CLIP image vector. Then the decoder, a diffusion model based on GLIDE, takes over. It starts with noise and adds details guided by the image vector. This creates the final image.

The framework keeps things efficient. The encoder and prior prepare the data; the decoder builds from it. You won’t dive deep into code yet, but know it’s modular. Swap parts, and you tweak outputs. This setup helped reduce early AI art flaws, like mismatched colors or shapes.

Practical Limitations and Resolution Constraints

DALL-E 2 caps at 1024×1024 pixels. Anything larger means moving to DALL-E 3. DALL-E 2 also struggles with fine details, like human faces or finger counts. Eyes might blur; hands could have extra digits. Later models improved on this, but DALL-E 2 still shows these gaps. Prompts need care to avoid odd results.

Cost matters too. Larger images cost more per generation. Test small first to save credits. These limits pushed updates, making later models stronger. You learn to pick tools that fit your needs.

Section 2: Advancements with DALL-E 3: Quality and Specification

Key Quality Improvements Over DALL-E 2

DALL-E 3 fixes many DALL-E 2 issues. Faces look sharper, with aligned eyes and proper features. Hands come out right far more often: five fingers, not six or seven. Complex prompts render better, catching small details like shadows. Training on better data boosts realism.

Quality jumps in lighting and depth. A sunny cat scene shows real sunlight rays and soft shadows. Edges stay crisp on main objects. This makes images feel alive, not flat. You notice the upgrade in everyday tests.

Understanding Resolution Scaling and Minimum Dimensions

DALL-E 3 starts at 1024×1024, with no smaller options. Beyond the square format, the API offers a wide 1792×1024 size and a tall 1024×1792 size, plus an hd quality setting for finer detail. This suits banners, posters, or video frames. But larger sizes and higher quality cost more per image. Stick to the minimum for quick tests.

The larger formats help pros, like designers. A basic prompt yields pro results. Experiment with sizes to see trade-offs. The hd setting shines for detailed art.

Cost-Effectiveness and API Usage Considerations

Images of the same size cost different amounts depending on the model. DALL-E 2 is cheaper for basics; DALL-E 3 charges more for its extra quality. Check your budget before big runs. Hosted APIs like OpenAI’s handle the heavy lifting, so no local setup is needed.

Weigh features against price. Great output won’t help if it’s too pricey. Start small, scale as needed. This keeps projects affordable.
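A quick way to weigh features against price is to estimate the bill before a big run. The sketch below is only arithmetic: the prices in EXAMPLE_PRICES are placeholders invented for this example, not real OpenAI rates, so swap in the numbers from the current pricing page. The function name estimate_cost is also hypothetical.

```python
# Placeholder prices in USD per image. These are NOT real OpenAI prices.
EXAMPLE_PRICES = {
    ("dall-e-2", "512x512"): 0.02,
    ("dall-e-2", "1024x1024"): 0.03,
    ("dall-e-3", "1024x1024"): 0.05,
}

def estimate_cost(model, size, n_images, prices=EXAMPLE_PRICES):
    """Return the estimated cost of generating n_images at the given size."""
    key = (model, size)
    if key not in prices:
        raise ValueError(f"No price listed for {model} at {size}")
    return prices[key] * n_images

# Compare the same 200-image batch across every listed option.
for model, size in EXAMPLE_PRICES:
    print(f"{model} {size}: ${estimate_cost(model, size, 200):.2f} for 200 images")
```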

Section 3: Practical Implementation and Code Execution

Setting Up the Development Environment (Google Colab GPU Allocation)

Use Google Colab for free GPU access. Open the Runtime menu, click “Change runtime type,” and pick T4 GPU. Save, then connect. Check the resources panel: you’ll see GPU RAM listed.

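The DALL-E API calls themselves run on OpenAI’s servers, so they work from any runtime. The GPU matters for models you run inside the notebook, like the Hugging Face ones later in this guide: on a CPU they crawl, while a GPU handles the math fast. This setup lets you run them without a beast machine. Always verify resources before running code.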
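One way to verify resources from code is to ask PyTorch whether it can see a GPU. This is a minimal sketch that assumes PyTorch is the framework in use (Colab ships with it); the helper name pick_device is made up for this example.

```python
def pick_device():
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed, so fall back to CPU
    return "cuda" if torch.cuda.is_available() else "cpu"

device = pick_device()
print(f"Running on: {device}")
```

If this prints cpu in Colab, the runtime type was probably not switched to T4 GPU or the session has not reconnected yet.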

Executing Image Generation Functions (DALL-E API Calls)

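Import the OpenAI library first. Set your API key securely, for example through an environment variable rather than in the notebook itself. Define a function that takes a prompt, a size, and an image count. Call client.images.generate() with those parameters. Sizes for DALL-E 2: 256×256, 512×512, or 1024×1024. DALL-E 3 returns one image per request, so keep the count at 1 there.

Use PIL to save or display results. By default the API returns a URL for each image; you can also request base64 data, which decodes to bytes that PIL can open. Run the code, and images appear. For multiples, loop through the response and append each image to a list. This leaves your creations ready to use.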
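The steps above can be sketched as follows. This assumes version 1 or later of the openai Python package, Pillow for PIL, and an API key stored in the OPENAI_API_KEY environment variable. The helper names check_request and generate_images are made up for this example, and the base64 response format is one choice among the options the API offers.

```python
import base64
import io

DALLE2_SIZES = ("256x256", "512x512", "1024x1024")

def check_request(size, n):
    """Reject sizes and counts that DALL-E 2 does not accept."""
    if size not in DALLE2_SIZES:
        raise ValueError(f"size must be one of {DALLE2_SIZES}, got {size!r}")
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10 for DALL-E 2")

def generate_images(prompt, size="512x512", n=1):
    """Call the Images API and return a list of PIL images."""
    check_request(size, n)
    # Imported here so the validation helper works without these packages.
    from openai import OpenAI
    from PIL import Image

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(
        model="dall-e-2",
        prompt=prompt,
        size=size,
        n=n,
        response_format="b64_json",  # ask for base64 data instead of URLs
    )
    images = []
    for item in response.data:
        raw_bytes = base64.b64decode(item.b64_json)
        images.append(Image.open(io.BytesIO(raw_bytes)))
    return images
```

In a Colab cell you might then call images = generate_images("a fluffy cat in sunny grass", n=2) and save the first result with images[0].save("cat.png"). Each call is billed, so test with small sizes first.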

Prompt Engineering for Superior Results: Focus and Detail

Good prompts guide the AI. Say “cute fluffy black cat with blue eyes in sunny field” instead of just “cat.” Add details like “warm sunlight, sharp shadows.” This yields better focus on key elements.

Test variations: pink cat or cartoon style. Descriptive words improve edges and lighting. Why settle for blurry? Craft prompts like stories for top results. Practice builds skill.

- Start with subject: cat.

- Add traits: fluffy, blue eyes.

- Set scene: sunny background.

- Note style: realistic or fun.
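The checklist above maps neatly onto a small helper that assembles a prompt from its parts. The function build_prompt is a hypothetical convenience for this guide, not part of any library; the model only ever sees the final string.

```python
def build_prompt(subject, traits=(), scene="", style=""):
    """Combine subject, traits, scene, and style into one prompt string."""
    parts = [" ".join([*traits, subject])]  # traits go in front of the subject
    if scene:
        parts.append(scene)
    if style:
        parts.append(style)
    return ", ".join(parts)

prompt = build_prompt(
    "cat",
    traits=("cute", "fluffy", "black"),
    scene="in a sunny field, warm sunlight, sharp shadows",
    style="realistic photo",
)
print(prompt)
```

Keeping the parts separate makes variations cheap: change style to "cartoon" or swap a trait, and you have a new test prompt without retyping the rest.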

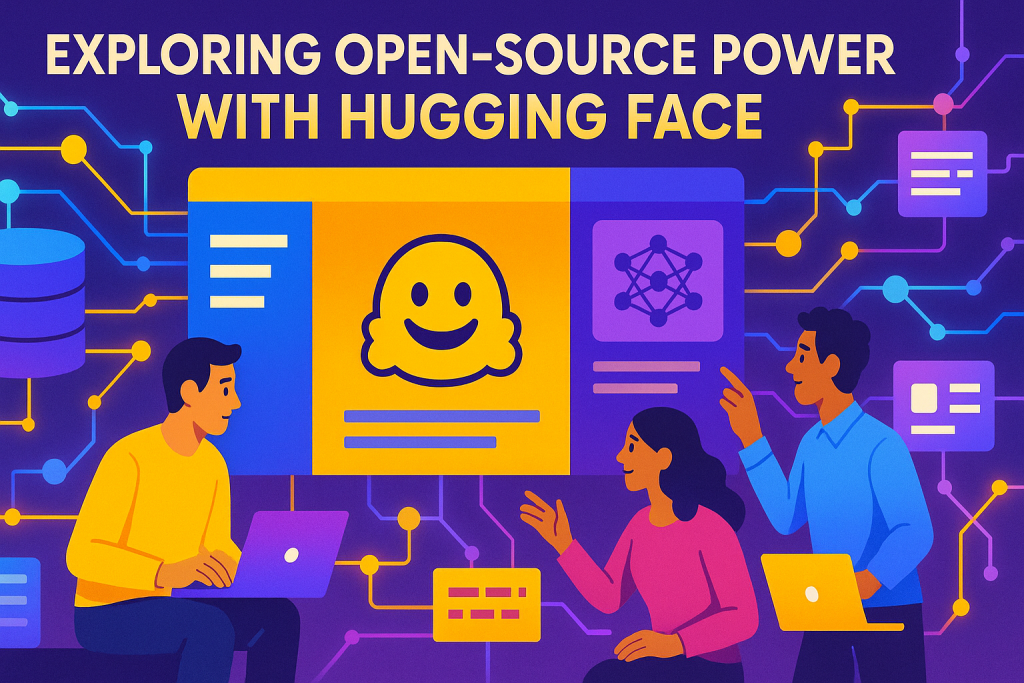

Section 4: Exploring Open-Source Power with Hugging Face

Hugging Face: The Open-Source AI Hub and Its Scale

Hugging Face began in 2016 as a chatbot company started by three French founders. Now, it’s a giant hosting over 500,000 models. It was valued at $4.5 billion in August 2023, having grown fast from that first team of three. Big labs share models and code here, free for all.

You find everything from text tools to video gens. It’s like a library for AI builders. Growth shows open-source wins.

Navigating the Model Ecosystem: Modalities and Leaderboards

Search by task: text-to-image, sentiment analysis, or video classification. Sort the results by downloads to see what’s popular: the top models pull in around 40 million downloads, and a CLIP model led with 42.5 million when this was written.

Click a category like zero-shot classification. Models filter in. Check sizes and updates. This helps pick winners fast.

- Text-to-image: Generate from words.

- Image-to-text: Describe pics.

- Video tasks: Classify clips.

The Critical Role of Documentation and Research Papers

Read the docs for setup steps and limits. They list training datasets and reported accuracy. Paper abstracts often flag biases, such as training data that over-represents cartoon styles.

Papers reveal strengths, like high scores on certain languages. Skim for key limits. This arms you for client talks—justify picks with facts. Don’t skip; it builds trust.

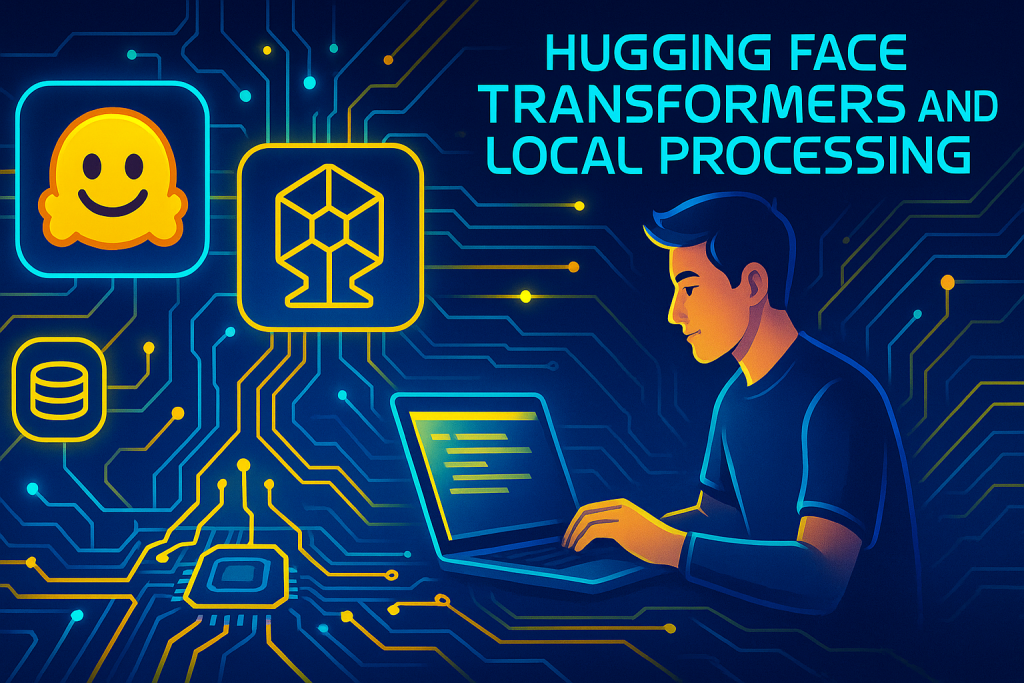

Section 5: Hugging Face Transformers and Local Processing

Introducing the Transformers Library and Pipeline Abstraction

Install transformers via pip. Import pipeline for easy tasks. For sentiment, use pipeline("sentiment-analysis"). Feed it a sentence like “This movie is boring.” It predicts negative with a confidence score.

Pipeline hides complexity. Input text, get output. No need to code the net. Great for quick starts.
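Here is a minimal sketch of that flow, assuming the transformers package and a backend such as PyTorch are installed. The first call downloads a default model, so it needs network access. The helper names describe and analyze are made up for this example.

```python
def describe(result):
    """Format one pipeline result, e.g. {'label': 'NEGATIVE', 'score': 0.98}."""
    return f"{result['label']} ({result['score']:.0%})"

def analyze(sentence):
    """Run the default sentiment pipeline on a single sentence."""
    # Imported here so the formatting helper above works without transformers.
    from transformers import pipeline

    classifier = pipeline("sentiment-analysis")  # downloads a default model on first use
    return classifier(sentence)[0]  # the pipeline returns a list, one dict per input

# In a Colab cell with transformers installed:
# print(describe(analyze("This movie is boring.")))
```

Each result is a plain dictionary with a label and a score, which is why a one-line formatter is enough to make it readable.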

Local Processing vs. Cloud API: The Computational Trade-Off

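Local runs download models to your machine, anywhere from a few hundred megabytes to several gigabytes. You process offline, with no API fees. But you need strong hardware.

Cloud services like OpenAI’s offload the work to remote servers. They’re fast and put no strain on your machine. The trade-off: pay per use versus upfront setup costs. Pick based on scale.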

Performance Benchmarking: GPU Dependency and Tensor Execution

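Models use PyTorch to work with tensors, which are multi-dimensional arrays of numbers. A GPU crunches them quickly; a CPU lags. For large models, a task that takes seconds on a GPU can take minutes or longer on a CPU.

Check the docs for GPU needs. The T4 in Colab works fine. Skip local runs if your machine is weak. The speed difference changes everything.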
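You can measure the gap yourself rather than take it on faith. The sketch below times a large matrix multiplication, a stand-in for the tensor math inside a model. It assumes PyTorch is installed; the names time_call and matmul_benchmark are hypothetical, and the numbers will vary by machine.

```python
import time

def time_call(fn, repeats=3):
    """Return the best wall-clock time in seconds over `repeats` calls of fn()."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best

def matmul_benchmark(device, size=1024):
    """Time a size x size matrix multiplication on the given device."""
    import torch  # imported here so time_call works without PyTorch

    a = torch.rand(size, size, device=device)
    b = torch.rand(size, size, device=device)

    def run():
        # .item() copies the result back, which forces the GPU to finish first.
        (a @ b).sum().item()

    return time_call(run)

# In Colab with a T4 runtime:
# print("cpu :", matmul_benchmark("cpu"))
# print("cuda:", matmul_benchmark("cuda"))
```

Taking the best of several runs smooths out one-off delays such as the GPU warming up on the first call.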

Case Study: Text Classification and Sentiment Analysis

Take “This restaurant is awful.” Pipeline labels it negative, score 99%. Another: “I don’t know where I’m going”—negative too. Scores near 1 mean high confidence.

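To process several sentences at once, pass them in a list. The pipeline returns one result per sentence, in the same order, so a positive sentence followed by a negative one comes back as positive, then negative. The default model handles negations reasonably well. Real-world use: review analysis.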
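For review analysis you usually care about totals and about which predictions to double-check. The sketch below works on a list shaped like the pipeline’s output. The sample_results values are hand-written for illustration, not real model output, and both helper names are made up.

```python
from collections import Counter

def tally_labels(results):
    """Count how many results carry each label."""
    return Counter(r["label"] for r in results)

def low_confidence(results, threshold=0.9):
    """Return the positions of results whose score falls below the threshold."""
    return [i for i, r in enumerate(results) if r["score"] < threshold]

# Hand-written stand-ins for what classifier([...]) would return.
sample_results = [
    {"label": "POSITIVE", "score": 0.99},
    {"label": "NEGATIVE", "score": 0.99},
    {"label": "NEGATIVE", "score": 0.72},
]
print(tally_labels(sample_results))
print("check by hand:", low_confidence(sample_results))
```

Because results come back in input order, the positions from low_confidence point straight at the original sentences.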

Section 6: Advanced Model Selection and Future Development

Specific Model Specification vs. Default Pipeline Usage

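The default pipeline picks a model automatically, but you can specify one, like model="roberta-large-mnli". It downloads 1.43GB. This is a natural language inference model, commonly used for zero-shot classification. Run it, and it classifies text against the labels you supply.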

Explicit choice tunes results. Test on your data. Defaults save time; customs fit needs.
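As a sketch of explicit model choice, the code below loads roberta-large-mnli through the zero-shot classification pipeline. Pairing this model with that task is an assumption on my part, and the helper names and candidate labels are made up. Running classify_zero_shot triggers the large download mentioned above.

```python
def classify_zero_shot(text, labels, model_name="roberta-large-mnli"):
    """Score `text` against each candidate label with an explicitly chosen model."""
    from transformers import pipeline  # imported lazily; needs transformers installed

    classifier = pipeline("zero-shot-classification", model=model_name)
    return classifier(text, candidate_labels=labels)

def top_label(result):
    """Return the (label, score) pair with the highest score."""
    return max(zip(result["labels"], result["scores"]), key=lambda pair: pair[1])

# In a Colab cell with transformers installed:
# result = classify_zero_shot("This restaurant is awful.", ["positive", "negative"])
# print(top_label(result))
```

The result holds parallel lists of labels and scores, so top_label simply picks the best pair.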

Assignment: Finding Size-Efficient, High-Accuracy Models

Hunt Hugging Face for small text classifiers. Aim under 500MB with 95% accuracy. Read leaderboards, test 10 sentences.

Compare docs and your runs. Note biases. This hones search skills.

- Search “sentiment analysis.”

- Filter small sizes.

- Test and score.
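For the “test and score” step, a few lines of Python keep the comparison honest. The sketch below assumes you have collected each model’s predicted labels and written down the labels you expected; the helper names, the sample lists, and the 420 MB figure are made up for illustration.

```python
def accuracy(predicted, expected):
    """Return the fraction of predictions that match the expected labels."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("need at least one example")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)

def meets_target(size_mb, acc, max_size_mb=500, min_accuracy=0.95):
    """Check a model against the assignment's size and accuracy goals."""
    return size_mb < max_size_mb and acc >= min_accuracy

# Made-up labels for 10 test sentences; replace with your own runs.
expected = ["NEGATIVE"] * 5 + ["POSITIVE"] * 5
predicted = ["NEGATIVE"] * 5 + ["POSITIVE"] * 4 + ["NEGATIVE"]
score = accuracy(predicted, expected)
print(f"accuracy: {score:.0%}, meets target: {meets_target(420, score)}")
```

With only 10 sentences, one mistake moves accuracy by 10 points, so treat the result as a rough filter rather than a benchmark.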

The Server Cost Dilemma: API Subscription vs. Local Infrastructure

Paid APIs suit teams with modest hardware: you pay as you go. Running Hugging Face models locally can cut long-term costs but needs an upfront GPU investment. For startups, start with an API; move to local as volume grows.

Balance: API for ease, local for control. Your budget decides.
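A rough break-even calculation can put numbers on that balance. The sketch below is plain arithmetic with hypothetical inputs: the hardware price and per-request costs are placeholders, and it ignores maintenance and staff time. The function name break_even_requests is made up.

```python
import math

def break_even_requests(hardware_cost, api_cost_per_request, local_cost_per_request=0.0):
    """Return how many requests it takes for local hardware to pay for itself.

    Returns None when running locally is not cheaper per request.
    """
    saving = api_cost_per_request - local_cost_per_request
    if saving <= 0:
        return None
    return math.ceil(hardware_cost / saving)

# Placeholder figures: a 1,000 USD GPU, 0.50 USD per API call,
# 0.25 USD per local call for electricity and upkeep.
requests_needed = break_even_requests(1000, 0.5, 0.25)
print(f"Local hardware pays off after about {requests_needed} requests")
```

If your expected volume sits far below the break-even point, the API is the cheaper path for now.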

Conclusion: Empowering Your AI Development Path

DALL-E 2 and 3 offer quick text-to-image magic via APIs, handling compute for you. Hugging Face brings open-source freedom, but demands local power for tasks like sentiment analysis. Key wins: better prompts boost DALL-E outputs; model hunting sharpens Hugging Face skills. You’ve seen setups, code, and trade-offs—now apply them.

Explore beyond basics. Try named entity recognition or summarization on Hugging Face. Build apps, test limits, and innovate. Your next project could solve real problems. Dive in today—what image or analysis will you create first?