Mastering Data Science Pipelines: From Pizza Analogy to Hugging Face Implementation

Imagine baking a pizza from scratch. You grab ingredients, slice them up, mix everything, bake it, and serve a hot slice. Now picture doing the same steps over and over, but for handling data in a project. That’s the heart of a data science pipeline—a smart way to turn raw info into useful results without starting from zero each time.

Data science pipelines follow a clear path, much like that pizza recipe. They take messy inputs, clean them, process them, and deliver clean outputs. This setup saves time and cuts errors, letting you focus on big ideas instead of small fixes.

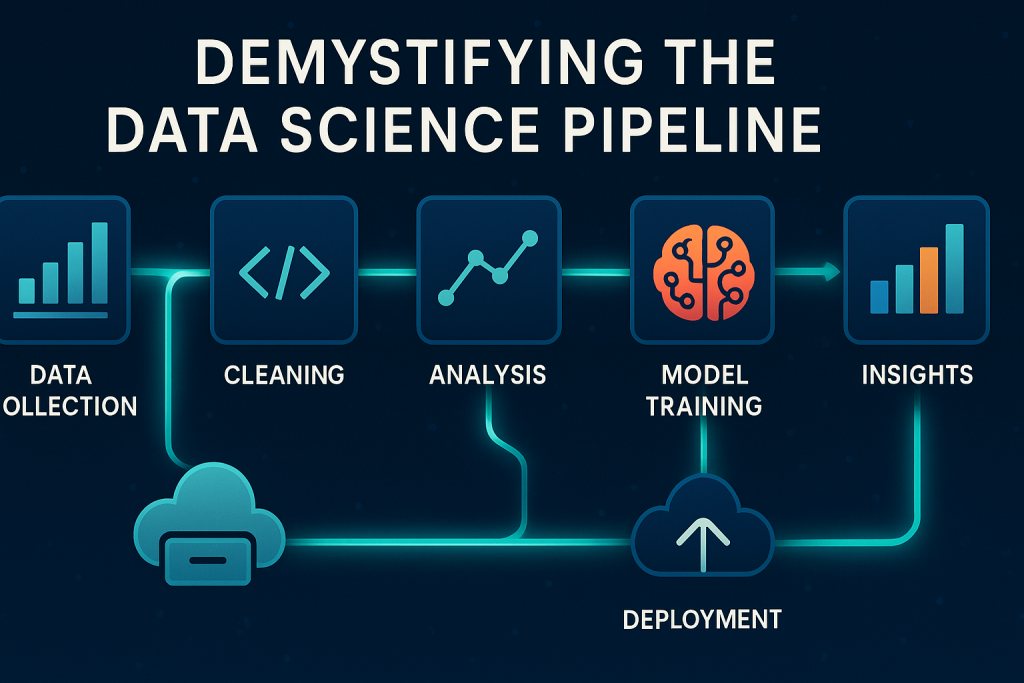

Introduction: Demystifying the Data Science Pipeline

The Pizza Analogy: Understanding Sequence and Structure

Think about making pizza. You start with inputs like dough, cheese, and toppings. These are your building blocks. Next, you preprocess: slice the veggies, grate the cheese, and mix the sauce. No one wants a raw mess on their plate.

Then comes processing. Knead the dough, add layers, and bake at high heat. This pulls out flavors and shapes the result. Finally, present it—cut slices, add herbs, and serve hot. Each step builds on the last, creating something whole.

In data science, it’s similar. Data acts as your ingredients. Clean it first, extract key features, run models, and visualize findings. This sequence ensures reliable results every time. Skip a step, and your “pizza” falls flat.

What Exactly is a Data Science Pipeline?

A data science pipeline is a chain of steps that handles data from start to finish. It grabs inputs, cleans them, analyzes them, and shares outputs in a clear way. Tasks like sentiment analysis or image checks fit right in.

These pipelines automate repeat work. No more manual tweaks for every project. They make complex jobs simple, like a recipe card for pros.

You can tweak them too. Adjust early steps for better data or later ones for sharper views. This flexibility keeps things fresh.

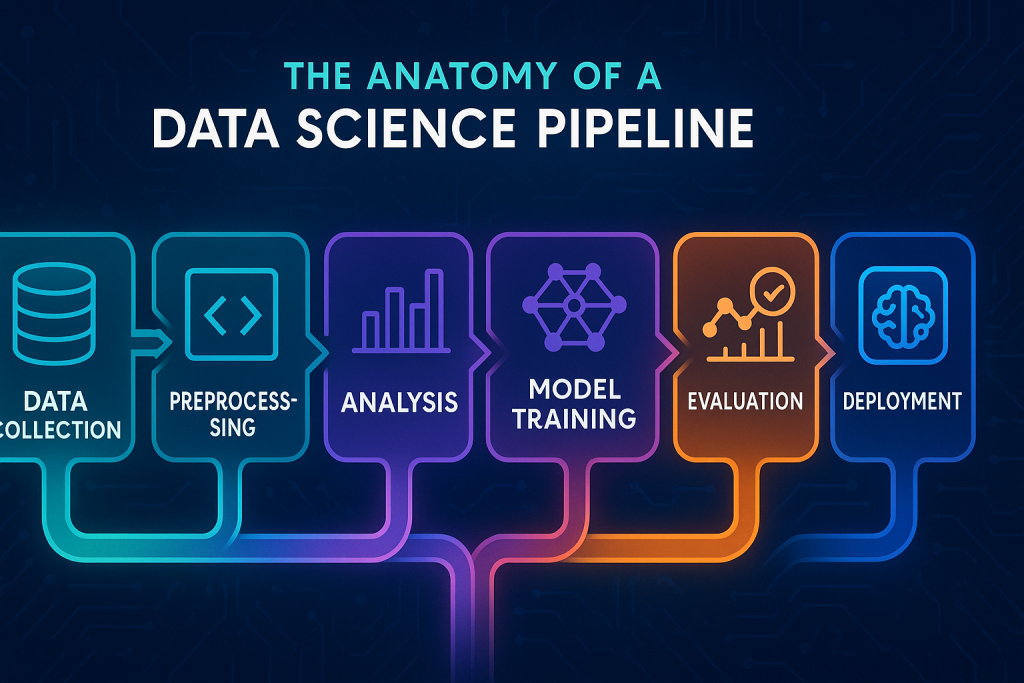

Section 1: The Anatomy of a Data Science Pipeline

Essential Stages in Sequence

Pipelines kick off with input data. In computer science or data work, this means collecting raw info like text or numbers. It’s your starting point.

Preprocessing follows. Clean the data—remove noise, fill missing spots, and fix errors. Dirty data leads to bad calls, so this step matters.

Next, process it. Pull out features, spot key columns, and run analysis. This could mean training a model for regression or classification. Bake in the insights here.

After that, evaluate and predict. Test the model, check accuracy, and generate results. Visualize them with charts for easy reading.

Pipelines as Life Simplifiers: Real-World Automation Examples

Pipelines pop up everywhere. Take WordPress developers. Installing a theme runs steps like feature pulls, analysis, and setup. Output? A ready site with logs.

Windows installation works the same. Multiple actions happen in order: checks, loads, and boots. No need to micromanage each bit.

Even office tasks fit. Tell a chef to make pizza—one command triggers the full recipe. Pipelines handle the details so you don’t.

Customization and Iteration: Adjusting the Output

You can tweak pipelines at key spots. If the taste is off, change ingredients early. For data, adjust inputs or cleaning to fix issues.

At the end, refine presentation. Good taste but plain look? Add visuals. This keeps outputs sharp without rebuilding everything.

Careful changes matter. Blind tweaks waste time. Smart use empowers you to own the process.

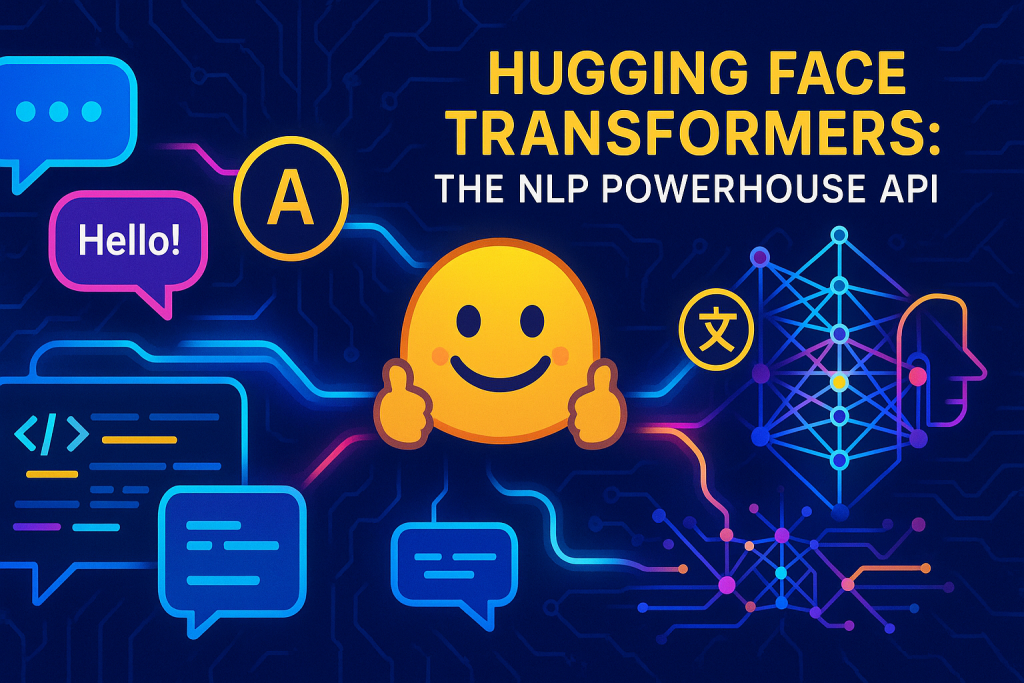

Section 2: Hugging Face Transformers: The NLP Powerhouse API

Hugging Face Hub: A One-Stop Shop for State-of-the-Art Models

Hugging Face is a goldmine for AI tools. It holds trained models from top schools and tech firms. All free, ready to grab.

Use their APIs for quick starts. The Hub offers pipelines for easy tasks. Transformers handle language work, while Diffusers tackle images.

With a few code lines, access world-class models. No need to build from scratch.

Implementing Text Summarization with Default Models

Text summarization shortens long paragraphs while keeping core ideas. Use Hugging Face’s pipeline for this.

Import transformers, then create a pipeline with ‘summarization’. Feed it text about Hugging Face features. It spits out a tight summary.

No model picked? It grabs a default one. This saves setup hassle.

Actionable Tip: Use f-strings in Python for inputs. Add ‘f’ before your string, wrap variables in braces. They insert values smoothly.

Controlling Summary Length: Min and Max Arguments

Set min_length and max_length to guide output size. For a long text on tokenization, aim for 20 to 60 words.

Short inputs limit changes. A 50-word paragraph won’t shrink much. But for 500 words, it shines—pulls key points without fluff.

Focus stays on meaning. Tools like this excel with big texts, boosting clarity fast.

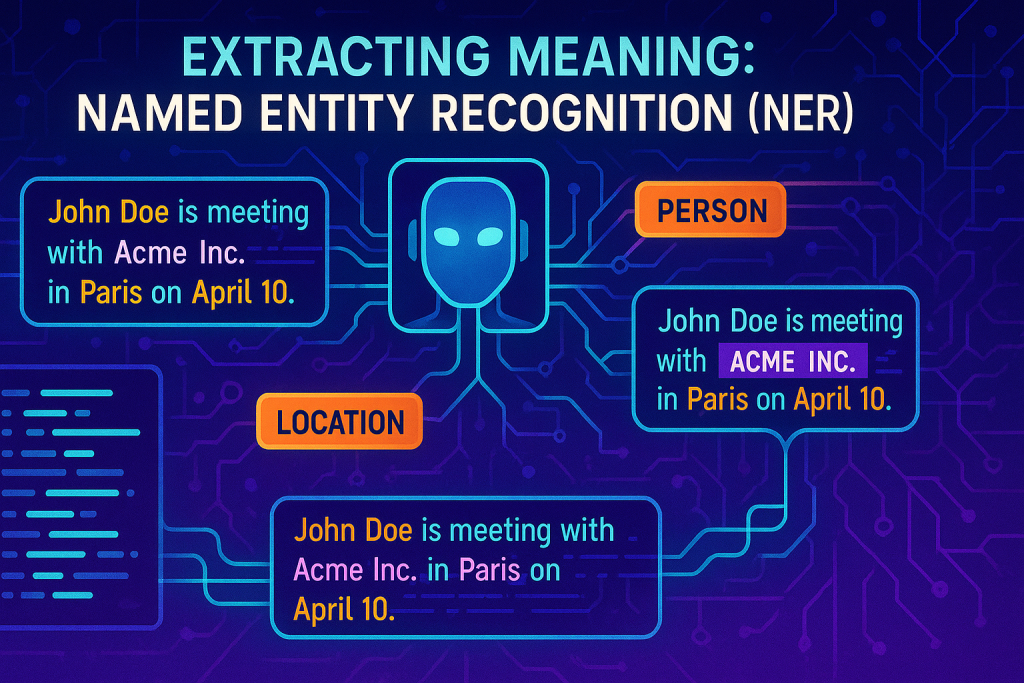

Section 3: Extracting Meaning: Named Entity Recognition (NER)

Defining NER: Identifying Key Entities in Text

Named entity recognition spots key items in text. Think persons, places, groups, dates, or money. It labels them clearly.

Take “Farhan is going to office.” It flags Farhan as a person and office as a location.

This pulls structure from chaos. Use it to map relations between entities.

Real-World Applications of NER Beyond Simple Labeling

NER aids healthcare. Track patients, doctors, drugs in bills. No more manual scans.

In legal docs, extract terms and facts. Speed up reviews.

For self-driving cars, it spots objects from text data. Blind aids or foreign departments benefit too.

Expert Reference: Read model cards on Hugging Face. Brainstorm uses—aim for 10 ideas daily. Like Jack Ma says, one idea changes everything. Train your mind to spot chances.

Analyzing NER Output: Model Bias and Tokenization Nuances

Run pipeline(‘ner’) on “Obama is going to New York.” Output shows entity types, scores, and spots.

It tags “Obama” as PERSON with 99% confidence. “New” and “York” split as separate LOCATIONS—also high scores.

Why the split? Default models train on English data, tokenizing words oddly. For local names like Lahore, results dip. Hunt fine-tuned models for better fits.

Section 4: Computer Vision Pipelines: Image Classification

The Classification Task: Image Input to Class Output

Image classification takes a photo and names it. Input a dog pic; output says “dog” with a score.

Good models go deeper—spot puppy or breed like Golden Retriever.

Scores show trust levels. From 0 to 1, higher means surer.

Practical Setup in Google Colab

In Colab, use PNG files only. Upload via the left panel’s Files section. Click upload, browse, and pick your image.

Files vanish after sessions end. For sensitive stuff, avoid or download quick.

Actionable Tip: Right-click uploaded files, copy path. Paste into code for exact links—no guesswork.

Model Specificity and Deep Predictions: Beyond the Basic Class

Pick a model like google/vit-base-patch16-224. Feed the image path.

Output lists top predictions. For a puppy, it might say “puppy” at 30%, plus “golden retriever.”

Most models give top five labels with scores. This covers close calls, like cat vs. kitten.

Section 5: Leveraging Hardware: GPU, PyTorch, and CUDA

The Necessity of GPU Acceleration

CPUs handle tough tasks well but slowly with few cores. GPUs shine in parallel work, with thousands of simpler cores.

Picture solving 1,000 easy math problems. Hire eight PhDs (CPU) or thousands of students (GPU). The crowd wins for speed.

Tensors—just stacked matrices—thrive here. Linear ops like adds or multiplies run parallel.

PyTorch: The Bridge Between Code and Hardware

PyTorch checks your setup. CPU only? It adapts. GPU there? It picks the fast lane.

Use tensors for data. Choose float16 over float32 to cut memory use—half the space for big models.

It auto-handles shifts, keeping code clean.

CUDA: The Essential Translator

CUDA translates PyTorch code for NVIDIA GPUs. No NVIDIA? It fails—errors pop up.

Like a bilingual aide between languages. Only works for one hardware family.

Stick to CPU if no match. But for heavy lifts, NVIDIA plus CUDA speeds things up huge.

Section 6: Advanced Pipelines: Image Generation and Translation

Stable Diffusion: Text-to-Image Generation with Diffusers API

Diffusers API fits diffusion models. These reverse noise addition to craft images from prompts.

Prompt “astronaut riding a horse.” Stable Diffusion v1.5 generates it step by step.

Actionable Tip: Set torch_dtype to float16. It loads the model with less RAM—key for 4GB setups.

Save as PNG. Download from Colab for keeps.

Translation Pipelines: Breaking Language Barriers

Translation turns English to French or Urdu. Set task like ‘translation_en_to_fr’.

Apps help hospitals with foreign patients. Or translate papers in odd tongues.

Sci-fi universal translators? We’re close—AI cuts language walls.

Model Selection and Resource Management in Translation

Check model cards for languages and size. A 13GB one? Colab crashes on 12GB RAM.

Pick small ones for local runs. Test downloads and likes for popularity.

Watch resources. Overload leads to hangs—match tools to your setup.

Conclusion: Building Smartly with Pipelines

Data pipelines turn chaos into order, like a trusted recipe. From pizza steps to Hugging Face code, they automate the grind.

Key wins: Sequence keeps work steady. Hugging Face opens doors to top models. GPUs and CUDA boost speed for tensor tasks. Always check resources to avoid crashes.

Look ahead—AI pipelines could erase barriers, from languages to images. Start small: try a summarization task today. Build one idea at a time. Your next breakthrough waits.